AGI Would Require a "World Model"

That's a Problem.

Welcome back to the Lamb_OS Substack! As always, I am thankful to have my subscribers and readers stop by! If you are a regular here in “Lamb_OS World”, then you – the reader – know you are the only reason I do this. So as always, thank you for visiting!

Let me begin by introducing myself as Dr. William A. Lambos. I call myself a computational neuroscientist, and I’ve been involved with the AI field (one way or another) since about 1970. As for credentials, see footnote 1 below if interested. I write a lot about AI, but not exclusively. When addressing a topic, I take pains to ensure my perspective is highly informed. I draw from many areas of study, and my conclusions, beliefs, and predictions often fall outside current or mainstream thinking. But these same beliefs are grounded in 50 years of study and rigorous cross-training in multiple fields. See my previous screeds on this Substack to learn more, and judge for yourself.

Please subscribe today if you have not already done so. This Substack is free! Subscribe for whatever reason might appeal to you, though I’d hope you do so for the value it offers.

This post is the third and final one in this series, in which we have explored three attributes of brain function that cannot be implemented in predictive or generative AI: pattern recognition, salience detection, and, here, so-called “world models.” These limitations more or less cripple every computation-based attempt to replicate the adaptive and agency-driven functioning that characterizes biological intelligence.

PART III: WORLD MODELS

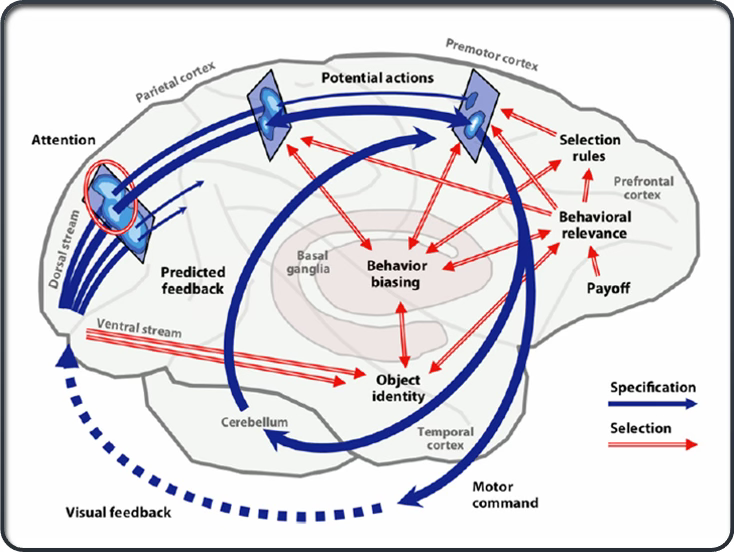

A “world model” may be loosely defined as an active, constantly changing set of internal assumptions about the world in which intelligent agents exist. These assumptions (“that thing is a microwave oven”) are based on billions of data points provided by our senses, our previous experiences, and the consequences of encountering that part of the world previously. This means that the agent itself is necessarily at the center of the model, as nothing can be perceived without reference to the perceiver (the self).

Why “assumptions” and not “internal representations”? Although it is tempting to assume that the world models of living beings, and especially primates, are stored internally as “reproductions” of the world, this just wouldn’t work. Our job is to predict reality, not re-imagine it as it was when last encountered. The world changes constantly! We need to discern how it is now. And what, perhaps, to expect next time:

“Predictive coding is operating throughout the brain where the time scales of sensory input are matched-mismatched to generate updates of future predictions of homeostasis” (Thatcher, 2021).

Simply said, we living creatures begin each moment of perception with what we predict is coming from the world. That is the expectation. Then, in real time, we use new information — hopefully accurate but sometimes not — to update the prediction. If predictions are met, we never consciously notice. If the prediction was a mismatch, however, then we go into an “alert status.” Physiological arousal increases, attention is focused, frontal lobe activation takes place, the amygdala, hippocampus, and other limbic structures are activated, and the world — or rather, our model of it — changes.
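For readers who think in code, the predict, compare, and update cycle just described can be caricatured in a few lines of Python. This is only a toy sketch of the bookkeeping, not a claim about how brains (or any AI system) actually do it. The class name `ToyPredictor`, the parameters `learning_rate` and `alert_threshold`, and the temperature readings are all hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToyPredictor:
    """Toy illustration of a predict -> compare -> update cycle (all names hypothetical)."""
    prediction: float = 0.0       # what the agent currently expects to sense
    learning_rate: float = 0.5    # assumed: how far a surprise shifts the expectation
    alert_threshold: float = 1.0  # assumed: mismatch size that counts as a surprise

    def perceive(self, observation: float) -> bool:
        """Compare an observation with the expectation; return True on a mismatch."""
        error = observation - self.prediction
        alert = abs(error) > self.alert_threshold
        if alert:
            # Mismatch: "alert status", and the internal expectation is revised.
            self.prediction += self.learning_rate * error
        # Prediction met: nothing is noticed and nothing changes.
        return alert


# Hypothetical example: the agent expects a room temperature of 20 degrees.
agent = ToyPredictor(prediction=20.0)
for reading in (20.3, 25.0):
    surprised = agent.perceive(reading)
    print(reading, surprised, agent.prediction)
```

The first reading falls within the expectation and passes unnoticed. The second triggers the "alert" branch and moves the expectation halfway toward what was sensed. Note what the sketch leaves out: it has no wants, no body, and no stake in the outcome.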

As far as implementing this nifty strategy in AI models, I ask, “How?” The problem cannot be solved through the usual approach of increasing scale (what I like to call the “more cowbell strategy,” in honor of an old but venerable SNL skit). We cannot emulate the attributes of intelligence (perpetually absent in AI models) by adding more cowbell: more memory, more GPUs, more data, more parameters, longer training times, or more of anything else will not improve deep learning, transformer, or any other type of AI architecture in this respect. In order to model (or simulate, have mentations or cognitions of) the world, a system first has to know what it wants. Genes (projected into a culture) provide those wants and needs for living creatures. No such substrate is available for silicon-based systems.

Just to remind readers, I believe in the laws of physics, and I do view us living creatures as automata in our own right. Theoretically, we could build brains like our own. My position is simply that the properties of living creatures we label as “intelligence” arise from energy dynamics unavailable to silicon-based systems. We live by ensuring a net collection of energy, not by disbursing it back into the universe as entropy. Because only living things can act as if the second law of thermodynamics didn’t exist (at least while alive), something fundamental about life has not been considered in trying to create intelligence.

Longer-term readers will know I’m talking about DNA. If DNA is the basis of agency (which I firmly believe; see my other posts in this Substack on “Agency”), then there can be no AGI without DNA or an analogous operator. Moreover, since DNA requires tiny compartments (cells and their nuclei) in which to operate, and since each of those cells acts as if it is “in charge,” it’s going to be a very long time before AGI is within reach.

So there we have it, the three mechanisms that underlie non-computational intelligence: pattern recognition (with invariance), salience detection (based on emotion that neither requires computation nor can be computed without cellular building blocks containing DNA), and a functional architecture of the brain that exists first and foremost to predict the future. We do have a “world model,” but it represents the world only as needed to engender and update predictions.

If, after this series of articles, you still wish to believe that AGI poses an existential risk to humanity, I have some advice. Look at other humans first!

That’s it for this post.

See you next time!

1. I hold a postdoctoral certification in clinical neuropsychology and a license to practice in California and Florida. My clinical focus is helping people with brain injuries. But I did not originally train in clinical psychology. That came two decades later.

My doctoral thesis in experimental biopsychology involved determining the full set of (known) computable parameters for the associative learning process called Pavlovian conditioning. Next, our lab coded these parameters as time series events into one of the first microcomputers in an attempt to simulate such learning. This further required that I complete a Master’s program in Computation in order to finish the thesis, which I did. Finally, I hold another Master’s degree, finished in 2022, in Data Science.

I’ve been coding since mainframes were the only accessible computers and LISP was the ‘lingua franca’ of AI (ca. 1970-81). I became interested in the relationship between neuroscience and automata during childhood; the very first post in this Substack includes a partial biography. For my thesis work, BASIC was too slow, so the coding had to be done in assembly language. Anyone who has ever coded at the level of the internal binary registers and memory locations will know why I had to learn computation to do my doctoral work in animal learning and memory.
